A structured overview of Andrej Karpathy's Microsoft Build 2023 talk, covering GPT's training process, its current state of development, the LLM ecosystem, and the future outlook.

The State of GPT

This article is a structured summary of Andrej Karpathy’s Microsoft Build talk in May 2023. The presentation slides (Beamer) are available at: https://karpathy.ai/stateofgpt.pdf

The talk covers GPT’s training process, its current development, the LLM ecosystem, and future outlook. Even a year later, it remains highly relevant and offers a great framework for analyzing current developments.

Overview

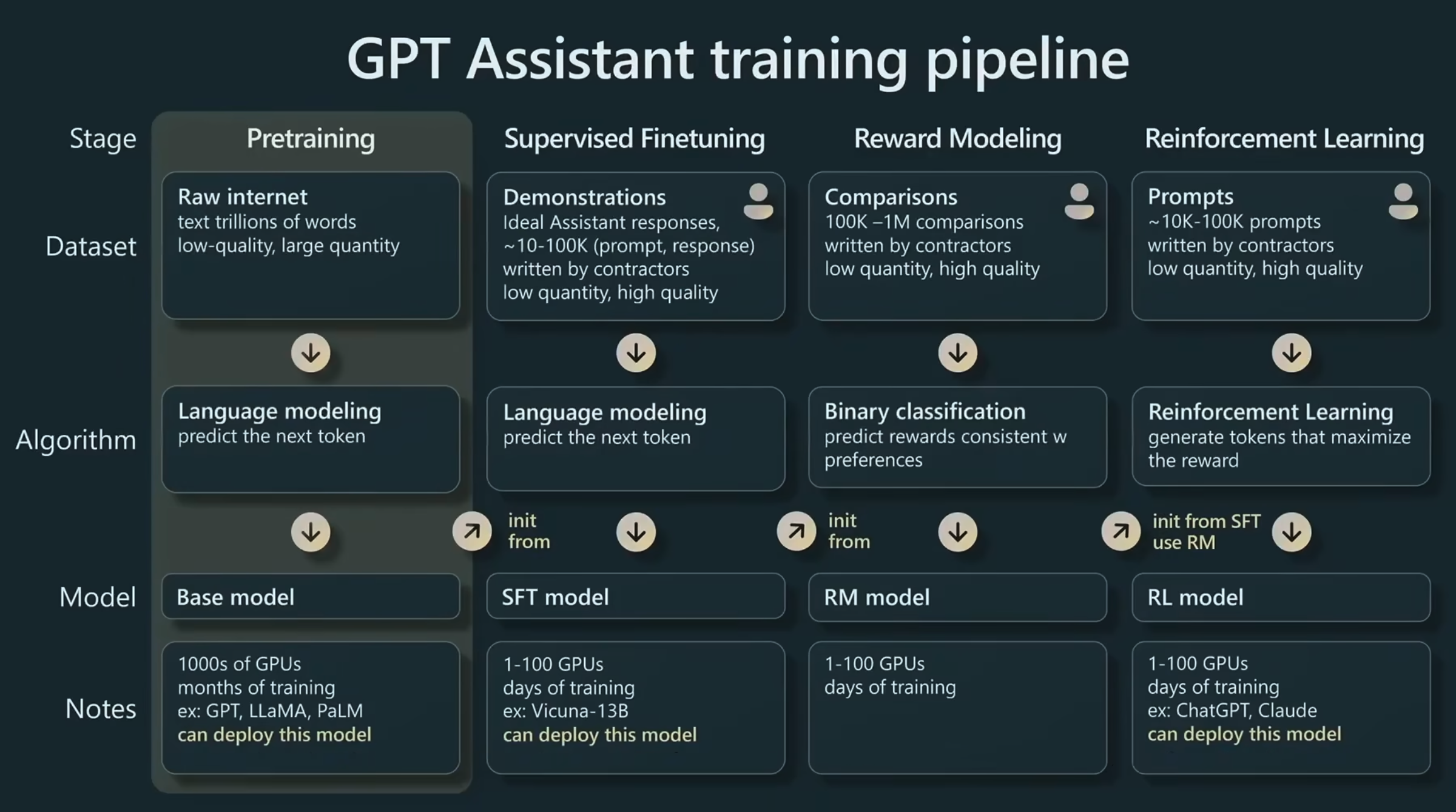

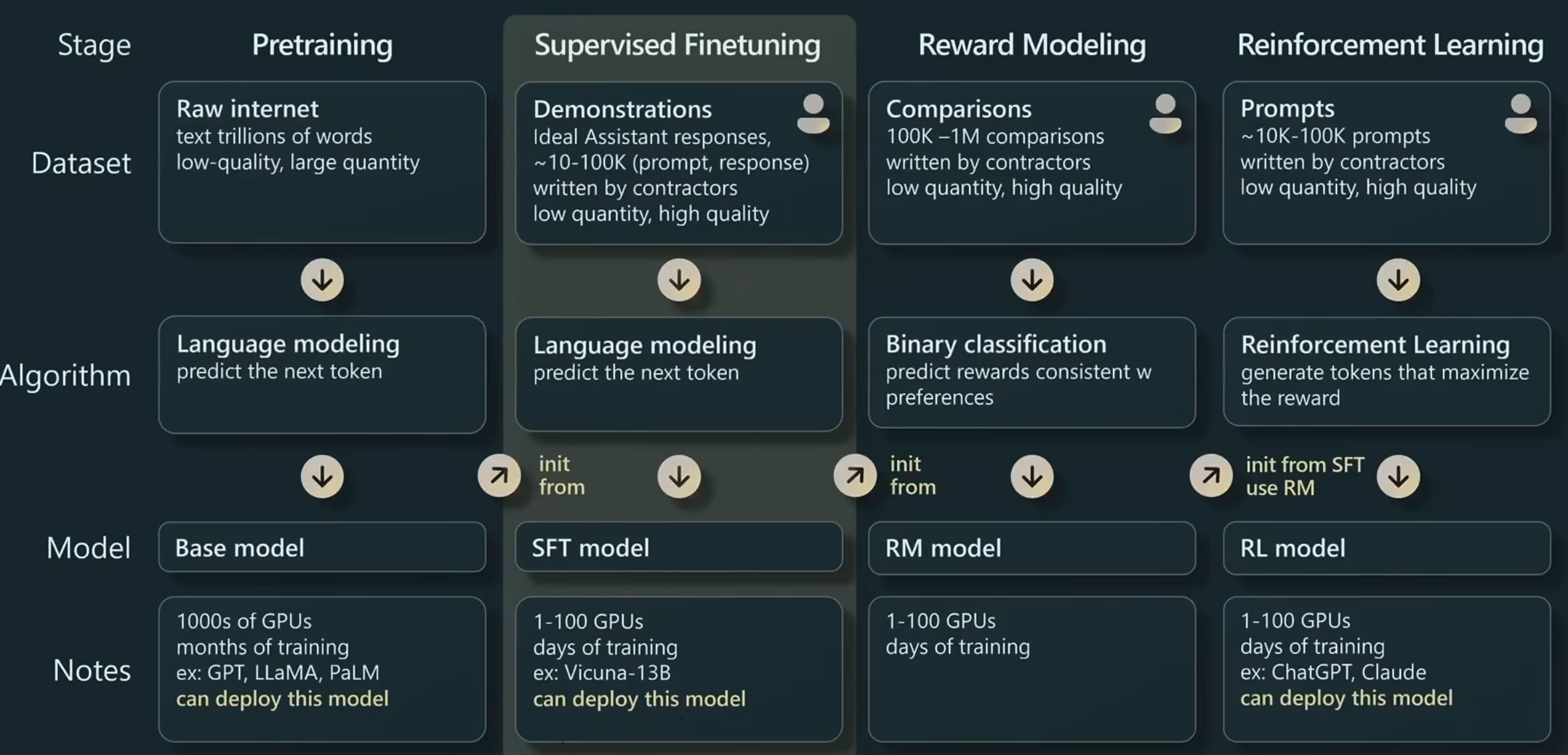

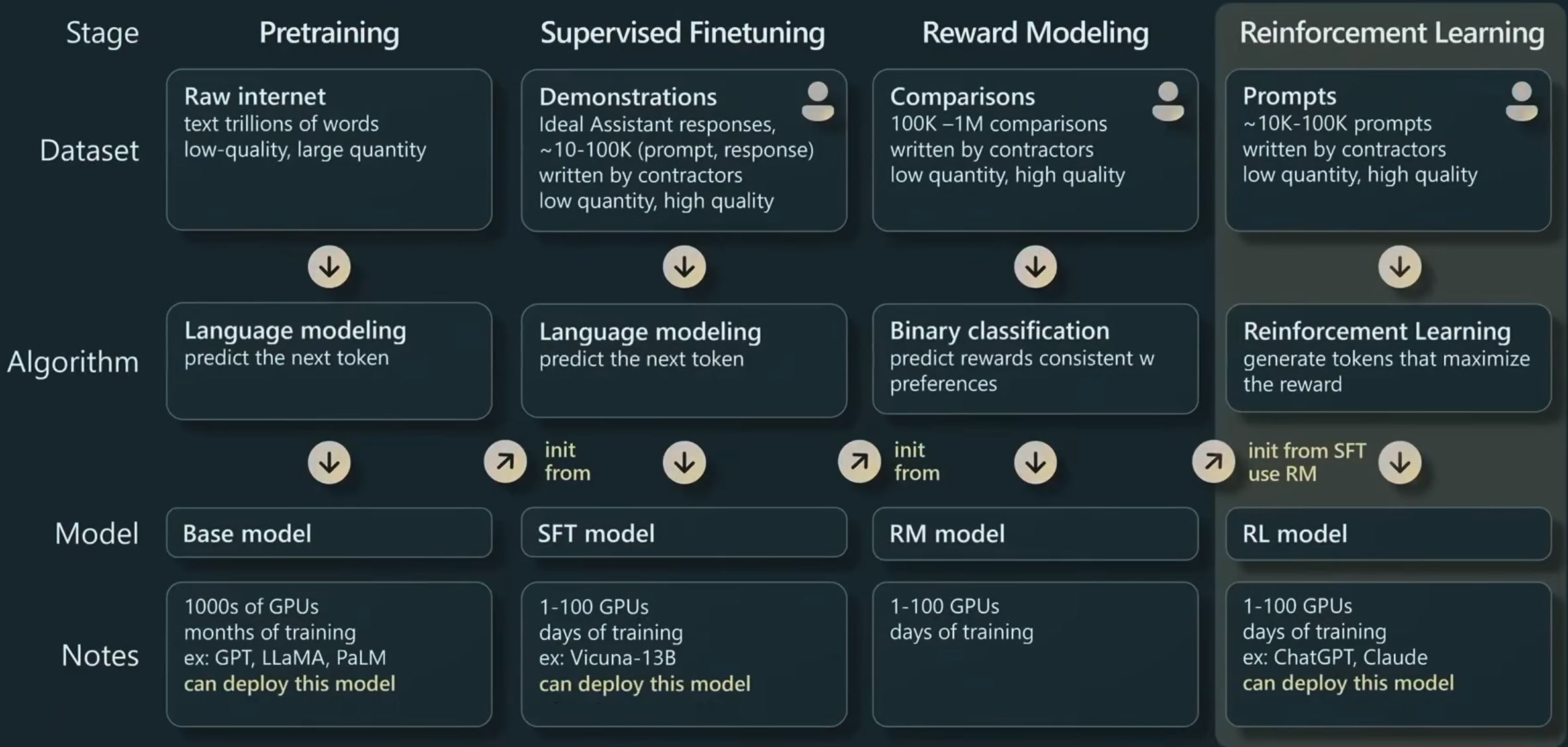

Pre-training accounts for the vast majority of training compute (roughly 99%). The three subsequent stages (supervised fine-tuning, reward modeling, and reinforcement learning) are all forms of fine-tuning.

Data Collection

Training data mixture used in Meta’s LLaMA model

Composition of the mixture:

- CommonCrawl: a general web crawl.

- C4 (Colossal Clean Crawled Corpus): a large, cleaned version of Common Crawl.

- Other high-quality sources: GitHub, Wikipedia, Books, ArXiv, and StackExchange.

These resources are blended and sampled based on specific ratios to form the training set for the GPT neural network.
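Sampling by mixture ratio can be sketched in a few lines. This is a minimal sketch under two assumptions: each training document is drawn from one source at a time, and the weights are the sampling proportions reported in the LLaMA paper. Real pipelines sample at the token or shard level and track epochs per source.

```python
import random
from collections import Counter

# Sampling proportions of the LLaMA pre-training mixture, as reported in the LLaMA paper.
MIXTURE = {
    "CommonCrawl": 0.670,
    "C4": 0.150,
    "GitHub": 0.045,
    "Wikipedia": 0.045,
    "Books": 0.045,
    "ArXiv": 0.025,
    "StackExchange": 0.020,
}

def sample_sources(n: int, seed: int = 0) -> list[str]:
    """Pick the source dataset for each of n training documents, proportionally to its weight."""
    rng = random.Random(seed)
    names = list(MIXTURE)
    weights = [MIXTURE[name] for name in names]
    return rng.choices(names, weights=weights, k=n)

counts = Counter(sample_sources(100_000))
for name, count in counts.most_common():
    print(f"{name:14s} {count / 100_000:.3f}")  # empirical share, close to the target weight
```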

Pre-training

Before actual training, several pre-processing steps are required.

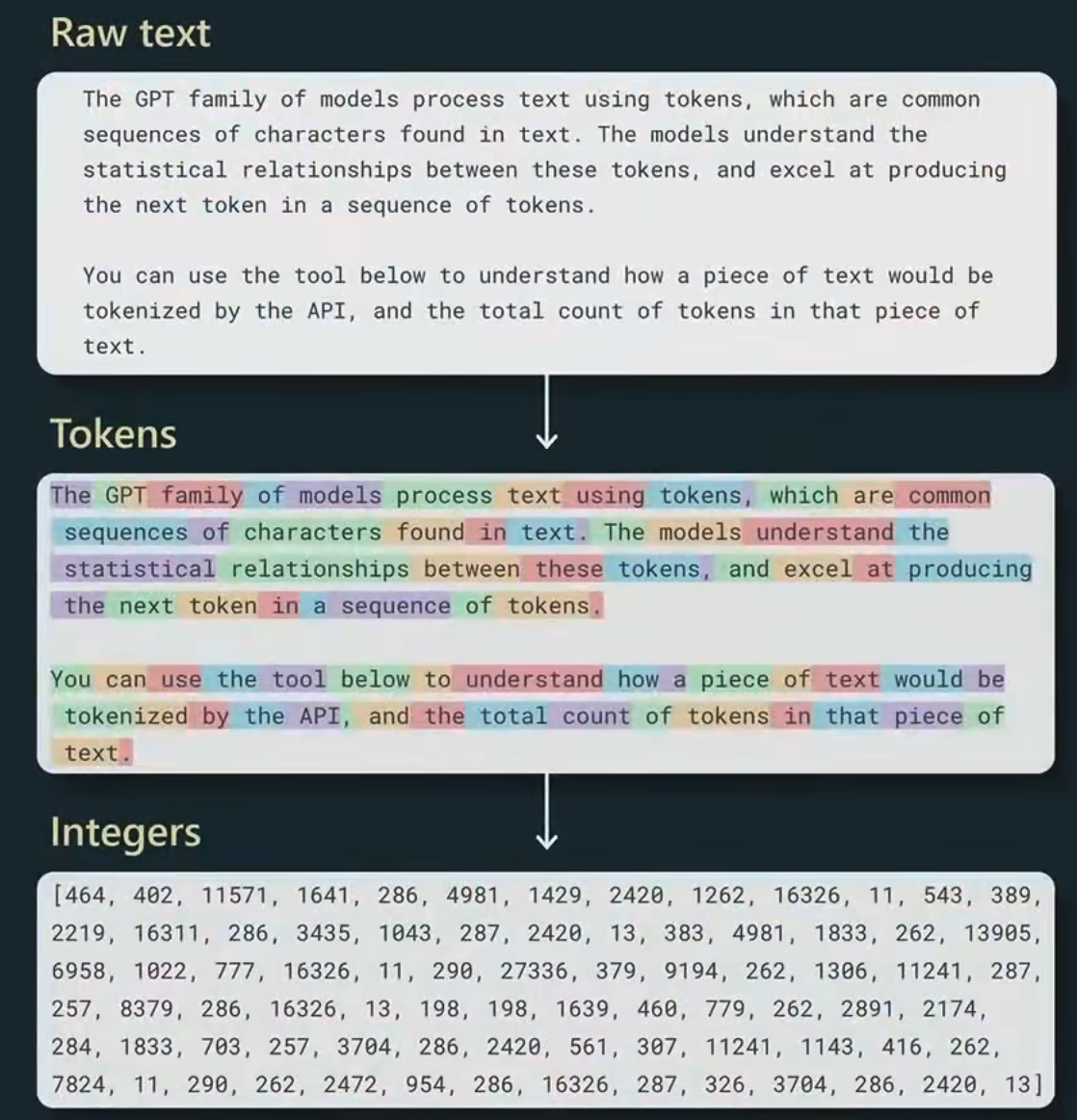

Tokenization

This is essentially a lossless conversion process that translates raw text crawled from the internet into a sequence of integers.

Methods like Byte-Pair Encoding (BPE) are used to iteratively merge small text chunks into groups called tokens.
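The core BPE training loop works as follows: start from raw bytes, repeatedly find the most frequent adjacent pair, and replace it with a new token id. The toy sketch below shows only that loop. Production tokenizers such as GPT's add extra machinery, for example pre-splitting text and running tens of thousands of merges. The helper names here are illustrative.

```python
from collections import Counter

def most_frequent_pair(ids: list[int]) -> tuple[int, int]:
    """Return the most common adjacent pair of token ids."""
    pairs = Counter(zip(ids, ids[1:]))
    return max(pairs, key=pairs.get)

def merge(ids: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every occurrence of `pair` in `ids` with the single token `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text: str, num_merges: int):
    ids = list(text.encode("utf-8"))  # start from raw bytes: ids 0..255
    merges = {}
    for k in range(num_merges):
        if len(ids) < 2:
            break
        pair = most_frequent_pair(ids)
        new_id = 256 + k               # each merge creates a brand-new token id
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges

def decode(ids: list[int], merges: dict) -> str:
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():  # dicts keep insertion order, so parts exist already
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8")

text = "low lower lowest low low"
ids, merges = train_bpe(text, num_merges=5)
print(len(text.encode("utf-8")), "bytes ->", len(ids), "tokens")
print(decode(ids, merges) == text)  # lossless round trip
```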

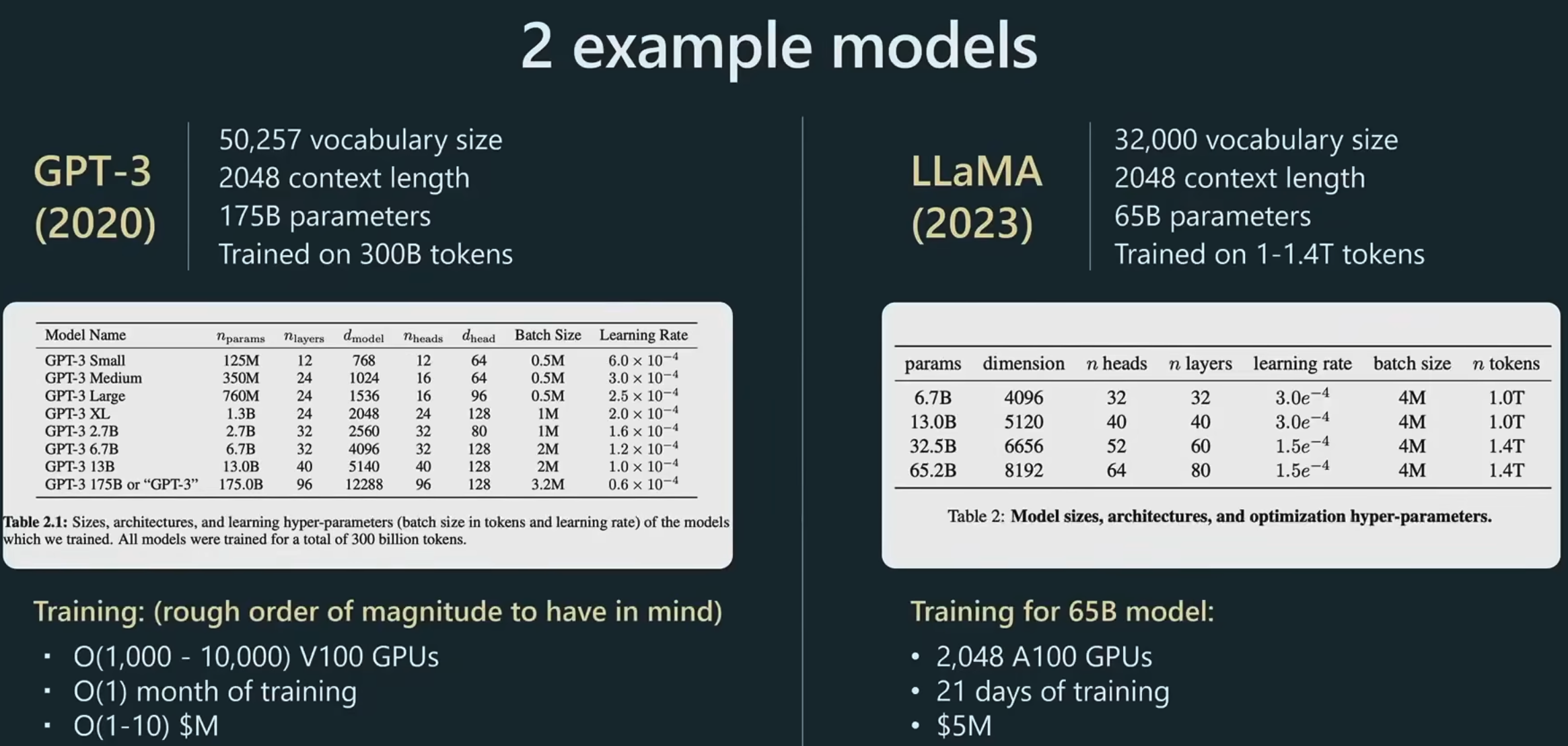

Transformer Neural Network Hyperparameters Table

Vocabulary Size:

- The total number of unique symbols (words, characters, or subwords) the model can recognize and generate. This defines the range of possible inputs and outputs.

- Analogous to the “character table” in the bigram model, which had 27 entries (26 letters plus a special start/end token).

Context Length:

- The maximum number of units (tokens) the model can consider at once when processing text. It defines how much previous information the model can “look back” at.

- In the NPLM model, context length was set to 3.

- Modern models such as GPT-4 Turbo accept over 100,000 tokens (128k).

Parameters:

- The total count of learnable weights and biases adjusted during training to minimize prediction error. It’s a direct metric of model size and complexity.

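- Example code from the NPLM model for counting parameters:

```python
import torch

g = torch.Generator().manual_seed(2147483647)  # fixed seed for reproducibility

C = torch.randn((27, 2), generator=g)     # embedding table: 27 characters -> 2-D vectors
W1 = torch.randn((6, 100), generator=g)   # hidden layer: 3 context chars x 2 dims = 6 inputs
b1 = torch.randn(100, generator=g)
W2 = torch.randn((100, 27), generator=g)  # output layer: logits over 27 characters
b2 = torch.randn(27, generator=g)

parameters = [C, W1, b1, W2, b2]
print(sum(p.nelement() for p in parameters))  # 54 + 600 + 100 + 2700 + 27 = 3481
```

Total Training Tokens: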

- The total number of tokens the model “sees” and learns from during the entire training process.

- For our Names Dataset, there were ~30k names. At the character level that is only a few hundred thousand tokens, well under 0.001 billion (1B = 10^9).

Notably, LLaMA (65B parameters) achieved better results than GPT-3 (175B parameters) by training much longer: about 1.4 trillion tokens versus GPT-3’s 300 billion. This shows that parameter count alone doesn’t determine performance.
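The difference is easiest to see as training tokens per parameter. The quick calculation below uses the commonly cited figures for GPT-3 (175B parameters, 300B tokens) and LLaMA-65B (65B parameters, 1.4T tokens).

```python
# Commonly cited model sizes and training-set sizes (in raw counts).
models = {
    "GPT-3": {"params": 175e9, "tokens": 300e9},
    "LLaMA-65B": {"params": 65e9, "tokens": 1.4e12},
}

ratios = {name: m["tokens"] / m["params"] for name, m in models.items()}
for name, r in ratios.items():
    print(f"{name}: {r:.1f} training tokens per parameter")
```

By this measure, LLaMA-65B sees more than 12 times as many tokens per parameter as GPT-3.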

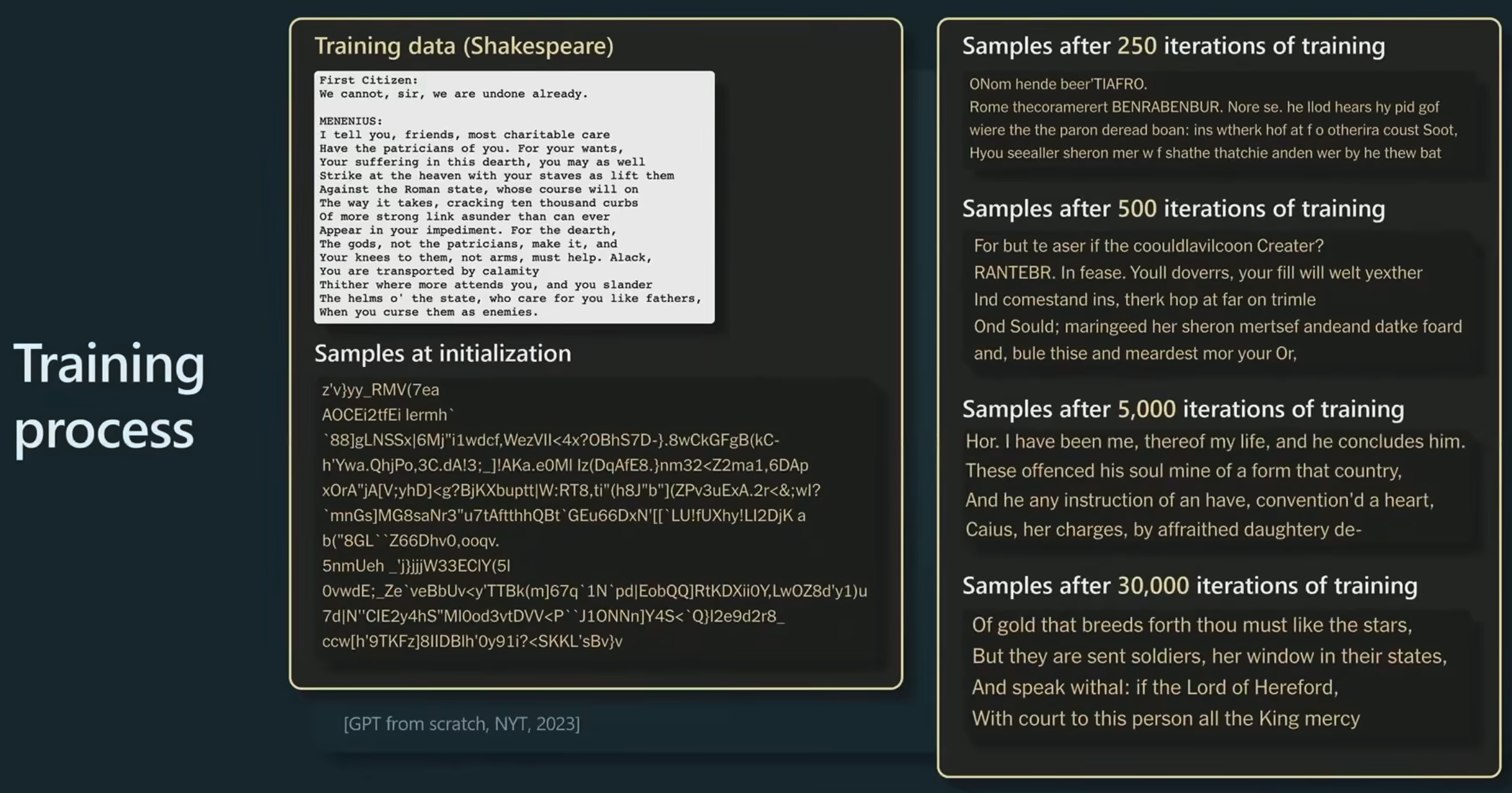

Initially, weights are random, so samples are nonsensical. Over time, samples become coherent. As shown, the model learns words, spaces, and punctuation.

Training Curve

The key metric is the loss function. A lower loss means the Transformer assigns a higher probability to the correct next integer (token) in the sequence.
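The sketch below makes the loss concrete using a batch of shape (B, T). Each position's target is simply the token that follows it, and the loss is the cross-entropy of the model's predictions against those targets. Random tokens and random logits stand in for real data and a real Transformer, so only the mechanics are meaningful.

```python
import math

import torch
import torch.nn.functional as F

torch.manual_seed(0)

vocab_size, B, T = 50257, 4, 10  # GPT-2/GPT-3 vocabulary size; a toy batch of 4 rows x 10 positions
tokens = torch.randint(0, vocab_size, (B, T + 1))  # stand-in for a chunk of tokenized training text

x = tokens[:, :-1]  # inputs: positions 0..T-1 of each row
y = tokens[:, 1:]   # targets: the same rows shifted left by one, i.e. the next token everywhere

# Stand-in for the Transformer's output: a score (logit) for every vocabulary entry at every position.
logits = torch.randn(B, T, vocab_size)

# Cross-entropy: the mean negative log-probability assigned to the correct next token.
loss = F.cross_entropy(logits.reshape(B * T, vocab_size), y.reshape(B * T))

# Baseline: predicting uniformly over the vocabulary gives exactly ln(vocab_size).
uniform = F.cross_entropy(torch.zeros(B * T, vocab_size), y.reshape(B * T))
print(loss.item(), uniform.item(), math.log(vocab_size))  # ln(50257) ≈ 10.82
```

A trained model should drive the loss well below this uniform baseline.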

GPT learns powerful general representations that can be efficiently fine-tuned for a wide range of downstream tasks.

For example, the traditional approach to sentiment analysis was to collect a labeled dataset of positive and negative examples and train a dedicated NLP model on it. With GPT, you can ignore the specific task during pre-training, then efficiently fine-tune the Transformer on a small amount of task data.

This works because language modeling forces the Transformer to multitask massively. To predict the next token well, it must learn the structure of text and many different concepts. This was the GPT-1 era.

In the GPT-2 era, researchers noticed that prompting could be even more effective than fine-tuning.

Base Model ≠ Assistant

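Base models are not the assistants we interact with in ChatGPT. In the API, the davinci model is a base model, whereas GPT-3.5 Turbo is an assistant. A base model does not answer questions; it just wants to complete internet documents, and it will often respond to a question with more questions.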

You can turn a base model into an assistant via few-shot prompting, for instance by formatting the prompt like an interview transcript. However, this is unreliable, which is why dedicated GPT assistants are trained.
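Here is a minimal sketch of that trick: build a "document" that looks like a transcript of a helpful assistant answering questions, and leave it open for the base model to continue. The header wording, the Human/Assistant labels, and the `assistant_prompt` helper are illustrative assumptions, not a format the talk prescribes.

```python
def assistant_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Format a base-model prompt as a transcript, with a few worked Q/A examples."""
    lines = [
        "The following is a conversation between a human and a helpful, "
        "knowledgeable AI assistant.",
        "",
    ]
    for q, a in examples:
        lines += [f"Human: {q}", f"Assistant: {a}", ""]
    lines += [f"Human: {question}", "Assistant:"]  # the base model continues from here
    return "\n".join(lines)

prompt = assistant_prompt(
    [("What is the capital of France?", "The capital of France is Paris.")],
    "How many legs does a spider have?",
)
print(prompt)
```

In practice, you would also stop generation when the model starts writing the next "Human:" line. Otherwise it happily continues the document with both sides of the conversation.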

Supervised Fine-Tuning (SFT)

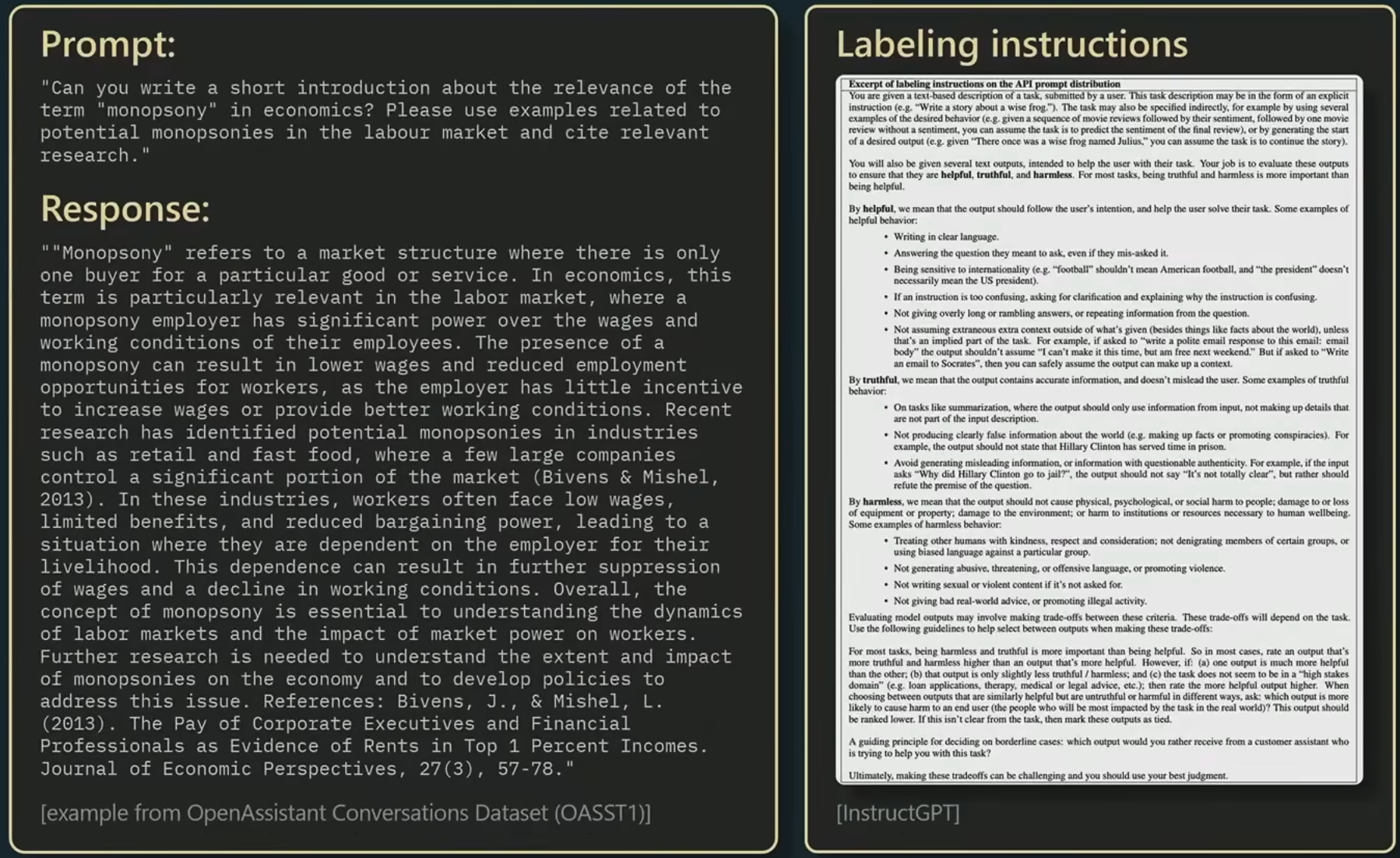

This stage involves collecting a small but high-quality dataset of prompts paired with ideal, human-written responses. The algorithm stays the same (language modeling); only the dataset changes. The result is an SFT model, which can already be deployed as an assistant.
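One common implementation detail is to compute the loss only on the response tokens, so the model is not trained to reproduce the prompt. This is an assumption here, since the talk does not go into it. The sketch concatenates a prompt and response, builds the usual next-token targets, and masks prompt positions with the `ignore_index` of PyTorch's `cross_entropy`. The token ids and the `build_example` helper are hypothetical.

```python
import torch
import torch.nn.functional as F

IGNORE = -100  # the default ignore_index of F.cross_entropy

def build_example(prompt_ids: list[int], response_ids: list[int]) -> tuple[torch.Tensor, torch.Tensor]:
    """Concatenate prompt and response; only response tokens contribute to the loss."""
    ids = torch.tensor(prompt_ids + response_ids)
    x, y = ids[:-1], ids[1:].clone()   # next-token inputs/targets, exactly as in pre-training
    y[: len(prompt_ids) - 1] = IGNORE  # targets that are still part of the prompt are masked out
    return x, y

# Hypothetical token ids for one prompt and its ideal human-written response.
x, y = build_example([5, 17, 42, 8], [99, 23, 7, 2])
print(y.tolist())  # [-100, -100, -100, 99, 23, 7, 2]

torch.manual_seed(0)
vocab_size = 128
logits = torch.randn(len(x), vocab_size)  # stand-in for the model's output
loss = F.cross_entropy(logits, y, ignore_index=IGNORE)
```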

The contractors who write these responses follow labeling instructions asking them to be helpful, truthful, and harmless.

RLHF Pipeline

Reinforcement Learning from Human Feedback

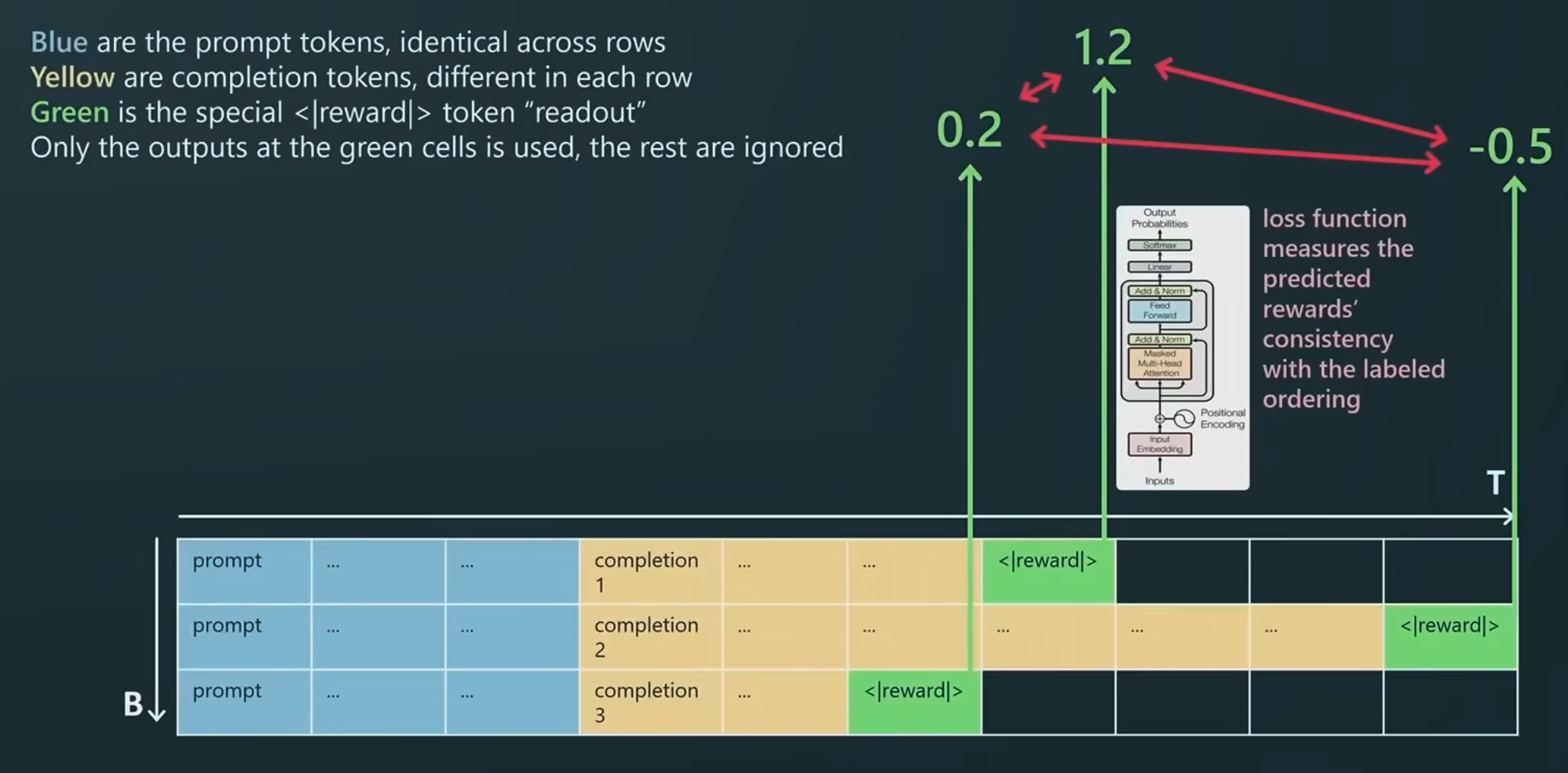

Reward Modeling

In this stage, data is collected as comparisons. For the same prompt, multiple SFT responses are generated, and humans rank them (very labor-intensive).

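A Transformer is then trained to predict these rewards. Each prompt and completion is laid out as a single sequence, and a special reward readout token is appended. The model's output at that token is read as a scalar reward, and the loss pushes these predicted rewards to agree with the human rankings.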
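The talk does not spell out the loss. A common formulation, used in InstructGPT, expands each ranking into (better, worse) pairs and minimizes -log σ(r_better - r_worse). The sketch below applies that loss to hypothetical reward values for three completions of one prompt. In a real setup, those values would come from the reward readout token.

```python
import torch
import torch.nn.functional as F

def pairwise_reward_loss(r_better: torch.Tensor, r_worse: torch.Tensor) -> torch.Tensor:
    """-log sigmoid(r_better - r_worse): small when the preferred completion scores higher."""
    return -F.logsigmoid(r_better - r_worse).mean()

def ranking_to_pairs(ranking: list[int]) -> list[tuple[int, int]]:
    """Expand a best-to-worst ranking of K completions into all K*(K-1)/2 (better, worse) pairs."""
    return [(ranking[i], ranking[j]) for i in range(len(ranking)) for j in range(i + 1, len(ranking))]

# Hypothetical scalar rewards (one per completion) read out at the reward token.
rewards = torch.tensor([0.2, 1.5, -0.3], requires_grad=True)
human_ranking = [1, 0, 2]  # the labeler says: completion 1 > completion 0 > completion 2

pairs = ranking_to_pairs(human_ranking)  # [(1, 0), (1, 2), (0, 2)]
better = rewards[[b for b, _ in pairs]]
worse = rewards[[w for _, w in pairs]]
loss = pairwise_reward_loss(better, worse)
loss.backward()  # gradients push the rewards toward agreeing with the human ranking
```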

Once built, the reward model becomes the scoring mechanism for the next stage.

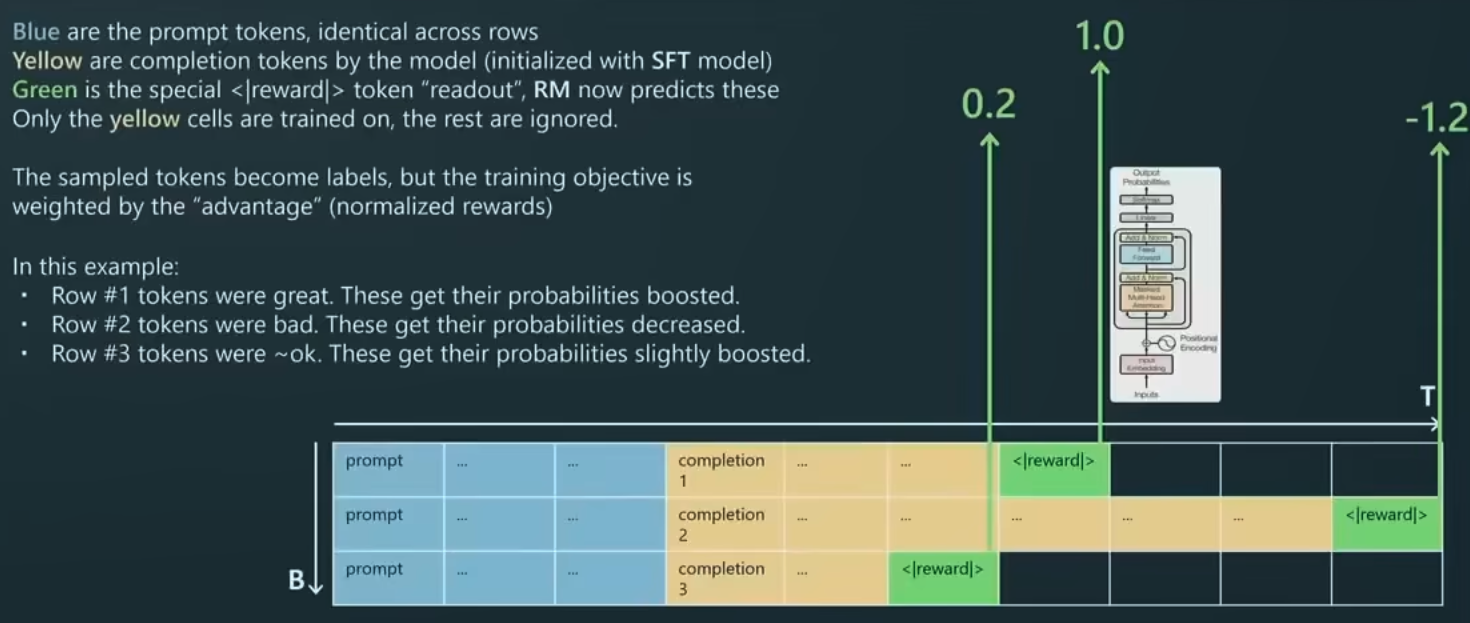

Reinforcement Learning

With the reward model, we can score any completion for a given prompt. RL is then used to optimize the SFT model against the reward model.

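Only the completion tokens (highlighted in yellow on the slide) are trained. Completions are sampled from the model, scored by the frozen reward model, and the policy is updated to favor high-scoring completions.

Tokens in completions that receive a high reward become more likely to be sampled in the future, and tokens in low-reward completions become less likely.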
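InstructGPT-style RLHF uses PPO for this step. The sketch below swaps in plain REINFORCE, a simpler policy-gradient method, to show only the core idea. Each sampled completion's log-probability is weighted by its baseline-subtracted reward, so above-average completions become more likely. The toy "policy" is a single shared next-token distribution, and `reward_model` is a hypothetical stand-in that just counts one token.

```python
import torch

torch.manual_seed(0)
VOCAB, N, T, GOOD = 8, 16, 5, 3  # vocab size, completions per batch, tokens per completion, rewarded token

logits = torch.zeros(VOCAB, requires_grad=True)  # toy policy shared by all positions
optimizer = torch.optim.SGD([logits], lr=0.5)

def reward_model(completions: torch.Tensor) -> torch.Tensor:
    """Hypothetical frozen reward model: counts occurrences of token GOOD in each completion."""
    return (completions == GOOD).float().sum(dim=1)

for step in range(50):
    with torch.no_grad():  # sampling and scoring are not differentiated
        probs = torch.softmax(logits, dim=-1)
        completions = torch.multinomial(probs, N * T, replacement=True).view(N, T)
        advantages = reward_model(completions)
        advantages -= advantages.mean()  # baseline: above-average completions get positive weight
    log_probs = torch.log_softmax(logits, dim=-1)[completions].sum(dim=1)  # log p(completion)
    loss = -(advantages * log_probs).mean()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

print(torch.softmax(logits, dim=-1)[GOOD].item())  # probability of the rewarded token, up from 1/8
```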

Iterating over many prompts and batches results in an RLHF model.

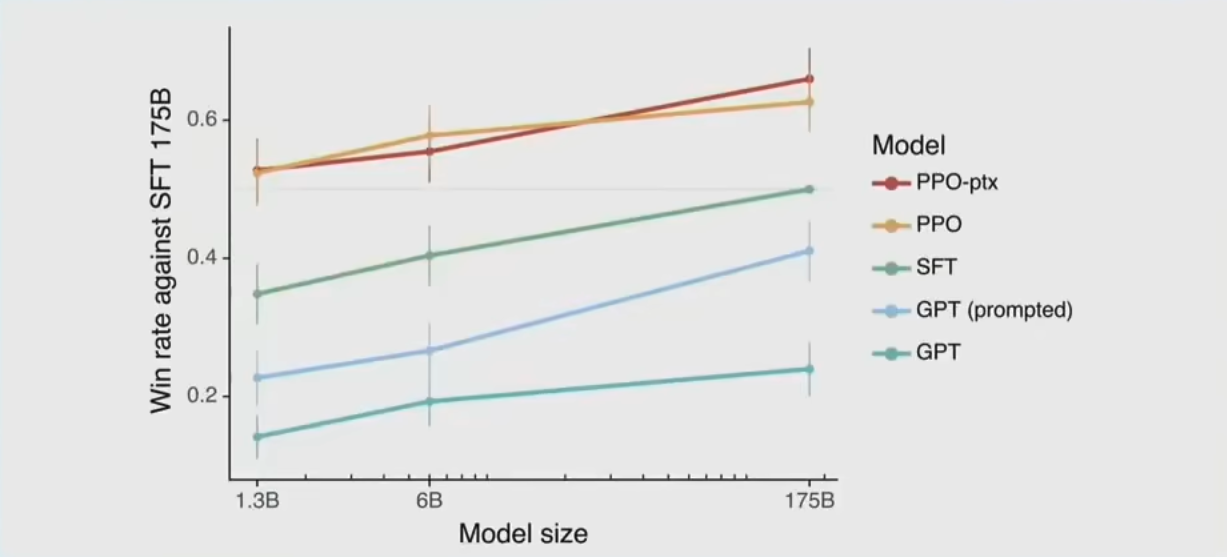

Why RLHF?

Humans prefer RLHF model responses.

One reason: judging comparisons is easier than generation. For example, it’s easier to pick the best of three haikus than to write one from scratch. This leverages human judgment to create better models.

However, RLHF models lose some entropy compared to base models, so their outputs are more predictable and less diverse. Base models can therefore be better for creative tasks such as “generating more Pokémon names.”
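Entropy measures how spread out a distribution is, which makes "losing entropy" concrete. The two next-token distributions below are made-up numbers chosen purely for illustration, not measurements of any real model.

```python
import math

def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats: higher means a more spread-out, less predictable distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

# Illustrative (made-up) distributions over the same 4 candidate continuations.
base_model = [0.30, 0.25, 0.25, 0.20]  # spread out: many plausible continuations
rlhf_model = [0.90, 0.05, 0.03, 0.02]  # peaked: almost always the same answer

print(f"base: {entropy(base_model):.3f} nats, RLHF: {entropy(rlhf_model):.3f} nats")
```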

Applying LLM Assistants

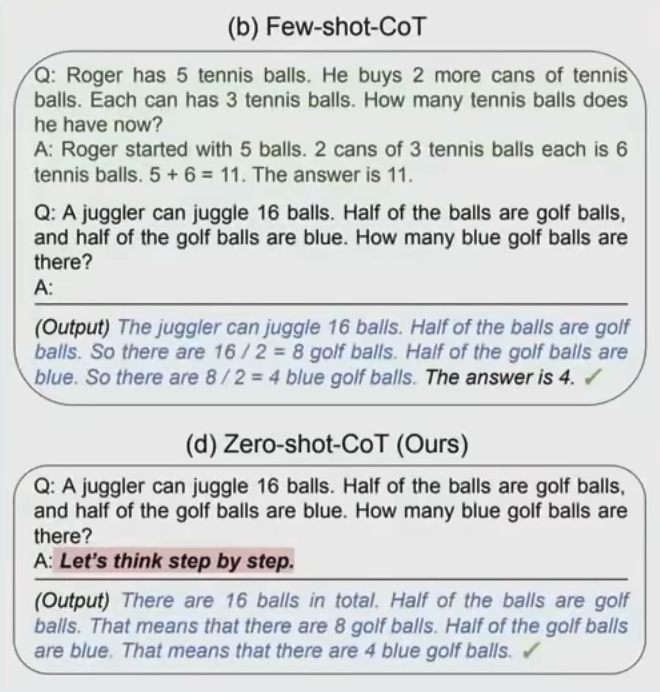

Chain of Thought (CoT)

When humans write, we have an internal monologue—reviewing, editing, and checking our work.

GPT just sees a sequence of tokens and spends equal computation on each. There is no internal monologue or self-correction. Transformers are token simulators; they don’t “know” their strengths or weaknesses. They do not perform feedback, sanity checks, or error correction natively.

GPT does have real strengths. Self-attention over its context window gives it a large working memory (perfect recall, but limited in size), and its billions of parameters hold a vast store of factual knowledge.
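The "working memory" comes from self-attention: at every position, the model can read directly from any earlier token in its context window. The minimal single-head causal sketch below shows only that core computation. Real GPT blocks use many heads, an output projection, residual connections, and batching.

```python
import torch

def causal_self_attention(x: torch.Tensor, Wq: torch.Tensor, Wk: torch.Tensor, Wv: torch.Tensor) -> torch.Tensor:
    """Single-head causal self-attention over a (T, C) sequence of token vectors."""
    T = x.shape[0]
    q, k, v = x @ Wq, x @ Wk, x @ Wv                   # queries, keys, values: (T, d)
    scores = (q @ k.T) / k.shape[-1] ** 0.5            # (T, T) similarity of every pair of positions
    mask = torch.tril(torch.ones(T, T, dtype=torch.bool))
    scores = scores.masked_fill(~mask, float("-inf"))  # a token may look back, never ahead
    weights = torch.softmax(scores, dim=-1)            # each row sums to 1
    return weights @ v                                 # weighted mix of earlier tokens' values

torch.manual_seed(0)
T, C, d = 6, 16, 8
x = torch.randn(T, C)
Wq, Wk, Wv = (torch.randn(C, d) for _ in range(3))
out = causal_self_attention(x, Wq, Wk, Wv)
print(out.shape)  # torch.Size([6, 8])
```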

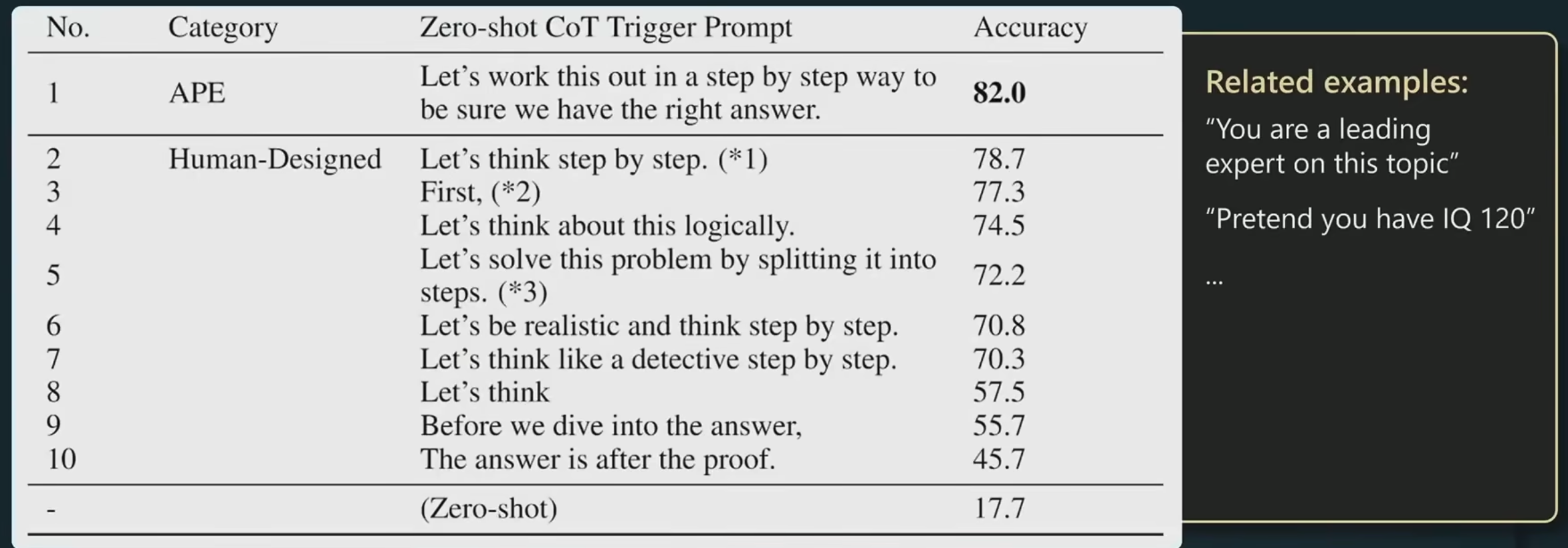

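Prompting bridges the cognitive gap between human and LLM “brains.” The model spends only a fixed amount of computation per token, so reasoning tasks must be spread across more tokens, for example by asking it to work through the problem step by step.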

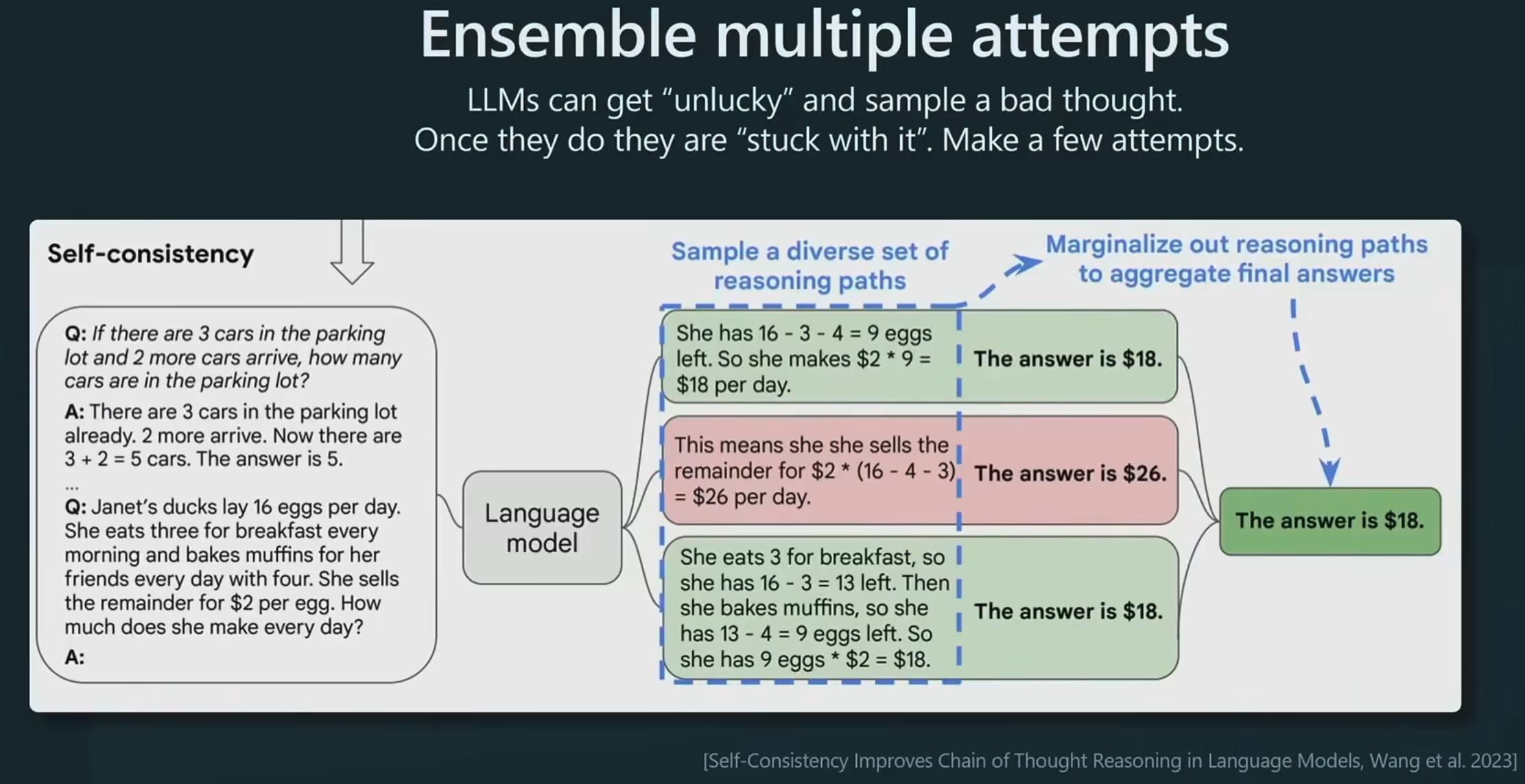

Simulating reasoning

Self-consistency: sample multiple reasoning paths and take a majority vote over their final answers.
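Majority voting takes only a few lines. This minimal sketch assumes a hypothetical `sample_answer` function that would call an LLM with a chain-of-thought prompt at non-zero temperature and extract the final answer. Here it is faked with a random choice so the example runs.

```python
import random
from collections import Counter
from typing import Callable

def self_consistency(sample_answer: Callable[[], str], n: int = 10) -> str:
    """Sample n independent reasoning paths and return the most common final answer."""
    answers = [sample_answer() for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]

# Hypothetical stand-in for an LLM: right ("42") 60% of the time, otherwise a scattered wrong answer.
rng = random.Random(0)

def fake_llm_answer() -> str:
    return "42" if rng.random() < 0.6 else rng.choice(["41", "24", "44"])

answer = self_consistency(fake_llm_answer, n=51)
print(answer)
```

Voting helps because wrong answers tend to scatter across many values, while correct reasoning paths converge on the same one.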

LLMs can often recognize a mistake once it is pointed out. However, they won't go back and correct it on their own unless prompted to check.

Tree of Thoughts

This extends planning by considering multiple potential paths and using value feedback for decision-making. It includes self-reflection where the model evaluates the feasibility of its own candidates.

It is implemented with Python “glue code” that runs specialized prompts inside a tree search.

Just as AlphaGo searches over possible moves and evaluates them, Tree of Thoughts is essentially AlphaGo for text.
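Here is a toy sketch of the glue code: a breadth-limited search in the spirit of Tree of Thoughts. `propose` and `value` are hypothetical stand-ins for LLM calls that suggest next "thoughts" and judge how promising a partial solution is. They are replaced by arithmetic (reach 22 from 1 using +3 and *2) so the example runs.

```python
from typing import Callable, Optional

State = tuple[int, str]  # (current number, trace of the "thoughts" taken so far)

def tree_of_thoughts(
    root: State,
    propose: Callable[[State], list[State]],
    value: Callable[[State], float],
    is_solution: Callable[[State], bool],
    breadth: int = 3,
    depth: int = 4,
) -> Optional[State]:
    """Breadth-limited tree search: expand every kept state, score the children, keep the best few."""
    frontier = [root]
    for _ in range(depth):
        children = [child for state in frontier for child in propose(state)]
        for child in children:
            if is_solution(child):
                return child
        frontier = sorted(children, key=value, reverse=True)[:breadth]  # prune to the most promising
    return None

TARGET = 22

def propose(state: State) -> list[State]:
    """Stand-in for an LLM proposing next thoughts: here, two arithmetic moves."""
    n, trace = state
    return [(n + 3, f"{trace} +3"), (n * 2, f"{trace} *2")]

def value(state: State) -> float:
    """Stand-in for an LLM judging a partial solution: closer to the target is better."""
    return -abs(TARGET - state[0])

def is_solution(state: State) -> bool:
    return state[0] == TARGET

print(tree_of_thoughts((1, "1"), propose, value, is_solution, breadth=3))  # (22, '1 +3 *2 +3 *2')
print(tree_of_thoughts((1, "1"), propose, value, is_solution, breadth=1))  # None
```

With `breadth=1` the search is greedy and heads toward 16, a dead end. Keeping several candidates alive lets the search recover, which is the point of exploring multiple paths.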

Condition on Good Performance

Prompt Engineering

LLMs sometimes exhibit a “psychological quirk”: they imitate their training data rather than trying to succeed at your task.

Example: For a math problem, the training data includes both expert solutions and student errors. The Transformer doesn’t know which to emulate. You must explicitly demand high performance.

Asking explicitly for high-quality work helps keep the Transformer from spending probability mass on low-quality solutions.

But don’t overdo it: asking for a “400 IQ” answer may push the prompt outside the training distribution, or condition the model on science fiction.

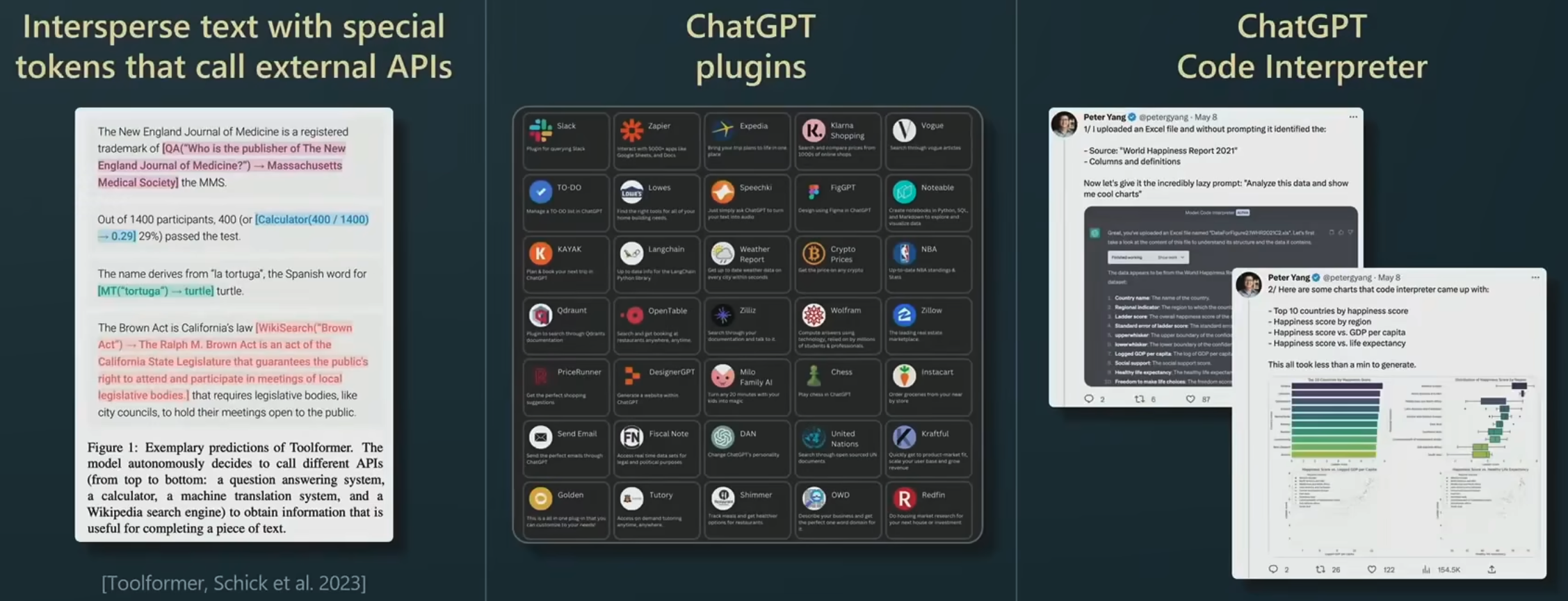

Tools / Plugins

LLMs don’t know what they are bad at by default. You can tell them in the prompt: “You are bad at math; use this calculator API for calculations.”
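Here is a minimal sketch of the glue code around such a prompt. It assumes a made-up convention where the model is told to write `CALC(<expression>)` whenever it needs arithmetic. The program then evaluates the expression and splices the result back into the text. The `CALC` tag and the `run_tools` helper are hypothetical, not any real plugin API. The evaluator walks Python's `ast` and accepts only plain arithmetic, so model output is never executed as code.

```python
import ast
import operator
import re

# Only these arithmetic operations are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg,
}

def safe_eval(expr: str) -> float:
    """Evaluate a plain arithmetic expression without executing arbitrary code."""
    def ev(node: ast.AST):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval"))

# Matches CALC(...) with at most one level of nested parentheses inside.
CALC = re.compile(r"CALC\(([^()]*(?:\([^()]*\)[^()]*)*)\)")

def run_tools(model_output: str) -> str:
    """Replace each CALC(...) call in the model's text with the computed result."""
    return CALC.sub(lambda m: str(safe_eval(m.group(1))), model_output)

print(run_tools("The total cost is CALC(1234 * 5678) dollars."))
# The total cost is 7006652 dollars.
```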

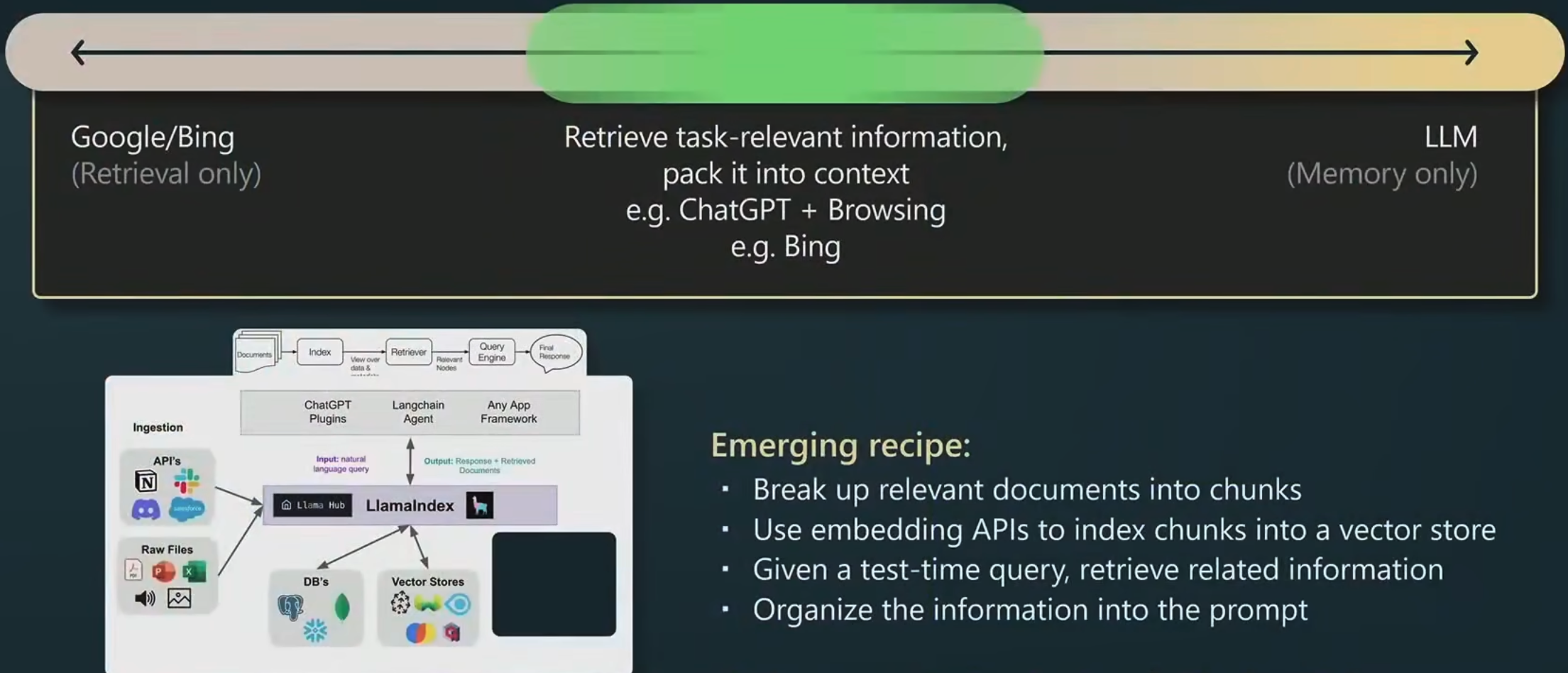

Retrieval-Augmented LLMs

Search engines (the pre-LLM era) are retrieval-only, while LLMs are memory-only. Between these extremes lies a large design space for Retrieval-Augmented Generation (RAG).

Example: LlamaIndex connects data sources and indexes them for the LLM.

Documents are split into chunks, converted into embedding vectors, and stored in a vector database. At query time, relevant chunks are retrieved and injected into the prompt. It works exceptionally well in practice.
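The retrieval step can be sketched end to end in plain Python. This toy version makes three assumptions: a bag-of-words vector stands in for a learned embedding model, an in-memory list replaces the vector database, and the chunk size and prompt wording are illustrative choices. `embed` is a hypothetical placeholder, not any library's API.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int = 12) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

docs = [
    "The Eiffel Tower is in Paris and was completed in 1889.",
    "Python lists are mutable sequences; tuples are immutable.",
    "LLaMA was trained on a mixture of CommonCrawl, C4, GitHub and other sources.",
]
chunks = [c for d in docs for c in chunk(d)]

question = "When was the Eiffel Tower completed?"
context = "\n".join(retrieve(question, chunks, k=1))
prompt = f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"
print(prompt)  # the retrieved chunk is injected into the prompt before calling the LLM
```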

Think of it as a brilliant student who still wants the textbook nearby for reference during a final exam.

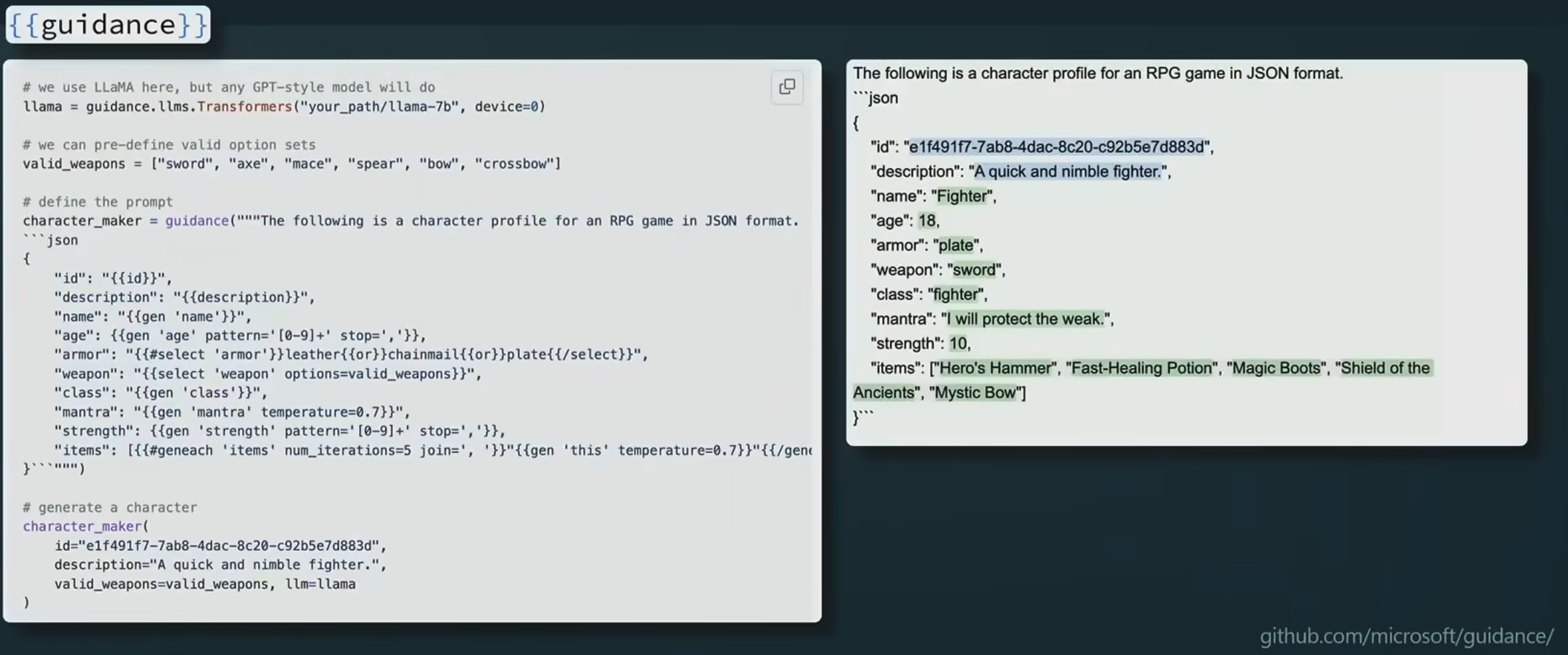

Constraint Prompting

Techniques for forcing the output to follow a specific template (e.g., JSON).

Example: Microsoft’s “Guidance” library.
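The basic mechanism for constraining output can be sketched as logit masking. At each step, tokens that would break the template get a score of -inf, so they can never be chosen. This is a generic illustration with a toy vocabulary and random stand-in scores, not the Guidance library's API. The fixed parts of the template are written directly, and only the integer slot is generated.

```python
import json

import torch

torch.manual_seed(0)
VOCAB = list("0123456789abcdefghijklmnopqrstuvwxyz") + ["<end>"]  # toy vocabulary
DIGITS = list(range(10))      # indices of "0".."9" in VOCAB
NONZERO = list(range(1, 10))  # "1".."9": JSON integers may not have leading zeros
END = VOCAB.index("<end>")

def fake_model_logits() -> torch.Tensor:
    """Hypothetical stand-in for the LLM's next-token scores (random here)."""
    return torch.randn(len(VOCAB))

def constrained_next(logits: torch.Tensor, allowed: list[int]) -> int:
    """Mask every disallowed token to -inf, then take the highest-scoring remaining token."""
    masked = torch.full_like(logits, float("-inf"))
    masked[allowed] = logits[allowed]
    return int(masked.argmax())

def fill_int_slot(max_digits: int = 3) -> str:
    """Generate an integer literal of 1 to max_digits digits with no leading zero."""
    out = ""
    for _ in range(max_digits):
        allowed = NONZERO if not out else DIGITS + [END]
        tok = constrained_next(fake_model_logits(), allowed)
        if tok == END:
            break
        out += VOCAB[tok]
    return out

result = '{"age": ' + fill_int_slot() + "}"
print(result, json.loads(result))  # always valid JSON, whatever the model "wanted" to write
```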

Fine-Tuning

Fine-tuning changes model weights. Parameter-efficient techniques such as LoRA make this much cheaper: only small, sparse pieces of the model are trained while the rest stays frozen. The frozen parts can then run with low-precision inference, which reduces cost while largely maintaining performance.
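Here is a minimal LoRA layer in PyTorch, following the formulation from the LoRA paper. The pretrained weight is frozen, and only a low-rank update `B @ A` is trained. The rank `r`, the scaling `alpha`, and the layer sizes are illustrative choices. Storing the frozen weights in low precision is not shown.

```python
import torch
import torch.nn as nn

class LoRALinear(nn.Module):
    """y = base(x) + (alpha / r) * x A^T B^T, with base frozen and only A, B trainable."""

    def __init__(self, base: nn.Linear, r: int = 8, alpha: float = 16.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad_(False)  # keep the pretrained weights clamped
        self.A = nn.Parameter(torch.randn(r, base.in_features) * 0.01)
        self.B = nn.Parameter(torch.zeros(base.out_features, r))  # zero init: starts identical to base
        self.scale = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) + self.scale * (x @ self.A.T @ self.B.T)

base = nn.Linear(1024, 1024)
layer = LoRALinear(base, r=8)
trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
total = sum(p.numel() for p in layer.parameters())
print(f"trainable: {trainable:,} of {total:,}")  # trainable: 16,384 of 1,065,984
```

Only about 1.5% of this layer's parameters receive gradients. Because `B` starts at zero, the wrapped layer initially behaves exactly like the pretrained one.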

Suggestions

Summary of recommendations for two different goals:

Goal 1: Maximum Performance

- Use GPT-4.

- Design detailed prompts with context, info, and instructions.

- If unsure, ask “How would a human expert do this?”

- Inject all relevant context.

- Use prompt engineering techniques.

- Experiment with few-shot examples (relevant and diverse).

- Use tools/plugins for difficult tasks (math, code).

- Optimize workflows/chains.

- Once you have maxed out prompting, consider collecting SFT data and fine-tuning. RLHF fine-tuning is also possible, but it is more complex and fragile.

Goal 2: Cost Optimization

- Once peak performance is achieved, try cost-saving measures (shorter prompts, GPT-3.5, etc.).